Speech recognition and speech artificial intelligence (AI) mark a return to “voice-first” communication. Thought leaders and AI experts herald voice-first communication as the next big wave: a return to the rich experiences we all have when speaking with each other.

Voice is naturally one of the most intuitive forms of communication; communities verbally passed down memories, culture, and history through stories before the written word.

Voice-first has evolved into much more than an Alexa app or Siri command; its principles rest on the premise that voice is more powerful, user-friendly, immersive, and faster than traditional digital interfaces. A core component of “voice-first” is speech recognition, the ability of a machine or program to identify words and phrases. In this article, we’ll look at the definition of speech recognition, how artificial intelligence has revolutionized speech recognition, and real-world applications of speech recognition AI.

What Is Speech Recognition?

According to TechTarget, “Speech recognition is the ability of a machine or program to identify words and phrases in spoken language and convert them to a machine-readable format.”¹ In 1952, Bell Laboratories developed the “Audrey” system to recognize a single voice speaking digits aloud. A decade later, technology giant IBM developed “Shoebox,” a program that understood and could respond to 16 different English words.

By the 1980s, speech recognition technology advanced from a handful of words to thousands. In the 1990s, the rapid adoption of the personal computer led to an explosion in speech technology, and by 2001, speech recognition technology was close to 80% accurate.

The history of modern-day speech technology begins with the launch of Google Voice Search in the mid-2000s². Google’s development of voice search brought voice technology into the hands of everyday consumers, and Apple’s development of Siri in 2011 ushered in a new era of voice technology.

How Has AI Revolutionized Speech Recognition?

As in many industries, the creation and adoption of artificial intelligence has revolutionized speech recognition, reducing costs, improving customer service, and helping businesses define and maintain competitive advantages. Riding the wave of AI-focused patents, companies are beginning to develop proprietary voice-powered technologies to drive more positive customer experiences. For example, Bank of America launched Erica, the first widely available virtual financial assistant, in 2018, and the assistant surpassed 1.5 billion client interactions in June 2023³. Erica’s quick growth and adoption signal consumers’ increasing comfort with voice analysis and technology. Bank of America clients have spent 3 million hours interacting with the assistant, up 31% year-over-year.

Real-life business applications of speech recognition technology include chatbots, voice search, natural language generation, and sentiment analysis. From automating inspections on manufacturing lines to analyzing large chunks of data to determine positive or negative sentiment, speech recognition AI applies to various industries and technologies.

Growth of Speech Recognition AI

As with many AI technologies, the future is now. In 2024, the number of voice-enabled devices worldwide will equal the global human population, roughly 8 billion⁴. Just a handful of years later, in 2030, the global voice assistant market is projected to exceed $14 billion. Chatbots may experience an even higher compound annual growth rate (CAGR): in 2023, the global chatbot market was estimated at $5 billion, and it is projected to increase by 300% by 2028⁵.
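To make the chatbot projection concrete, the short Python sketch below works out the growth rate it implies. It rests on one assumption not stated in the source: that "increase by 300%" means the market adds three times its 2023 value, reaching four times the starting size. The function and variable names are illustrative only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.10 means 10%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figure quoted in the article: chatbot market of about $5 billion in 2023.
start_2023 = 5.0  # USD billions

# Assumption: a 300% increase adds 3x the starting value, so the market quadruples.
end_2028 = start_2023 * (1 + 3.00)

years = 2028 - 2023
rate = cagr(start_2023, end_2028, years)

print(f"Implied 2028 market size: ${end_2028:.0f} billion")
print(f"Implied CAGR 2023-2028: {rate:.1%}")
```

Under that reading, the market would reach about $20 billion in 2028, which corresponds to a CAGR of roughly 32%. If the source instead meant that the market grows to 300% of its 2023 size, the end value would be $15 billion and the implied rate would be lower.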

Real-World Usage of Speech Recognition AI

Voice Assistants

Amazon’s Alexa, Google Assistant, and Apple’s Siri are voice assistants most consumers have used before. Within three years, an estimated 50% of people will use voice assistants monthly, with millennials and Gen Z more likely to interact regularly⁶.

Customer Satisfaction and Self-Service

Proactive and personalized customer engagement can improve client satisfaction and make it easier for customers to answer questions, solve problems, and more. Ninety-one percent of customers would like to use self-service tools, and after using them, they report a 19% higher satisfaction level⁷.

Translation Services and Conversational AI

Services like Google Translate translate speech quickly between different languages, and conversational tools like IBM’s Watson Assistant help businesses create their own conversational interfaces.

Transcription of Audio and Video Meetings

Tools like Otter.ai generate AI-powered meeting notes and provide real-time transcriptions for meetings⁸.

Assistive Technology for the Physically Challenged

Unaided communication systems rely on the physical body to convey messages. For people with speech or hearing impairments, speech recognition technology can improve communication and make technology more accessible.

The Benefits of AI in Speech Recognition

Why are speech enhancement and de-noising important in IoT and personal mobile devices? Especially in loud environments, speech enhancement helps people communicate more effectively and efficiently by removing the background noise.

Enhanced Team Collaboration

Speech recognition AI helps remove language barriers and enhances team cohesion and collaboration⁹. Clear, crisp audio improves understanding and comprehension, allowing global teams to work better together.

Improved Effectiveness

In crowded public spaces like busy call centers or coffee shops, background noise can undermine a conversation: callers can’t hear each other or risk misunderstanding one another. With speech enhancement, callers won’t need to repeat themselves multiple times or spend cognitive energy trying to decipher words through the background noise.

How Ambiq Contributes

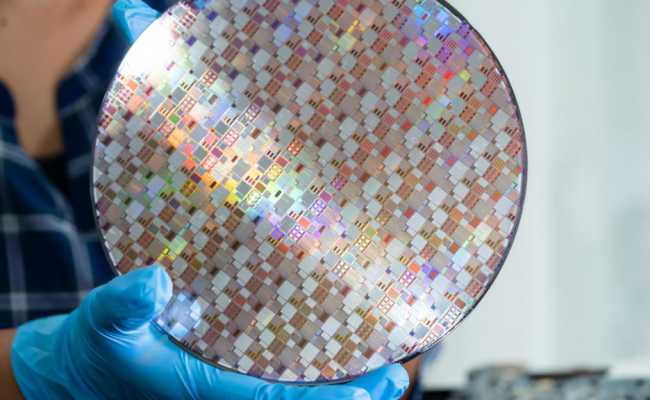

At the core of chatbots, voice assistants, and other speech-recognizing devices is a silicon chip working overtime to handle the power-hungry workloads of AI and speech recognition. With our advanced Sub-threshold Power Optimized Technology (SPOT®) platform and systems-on-chip (SoCs), Ambiq helps edge devices like these perform speech recognition at unprecedented levels of efficiency and ultra-low power. Additionally, with the introduction of our Neural Network Speech Enhancement (NNSE) to neuralSPOT’s ModelZoo, background noise can now be removed from speech on the device in real time, allowing clean voice capture in an array of noisy environments. From voice memo recording to voice chat to speech recognition, NNSE is optimized to operate on IoT edge devices with minimal latency and energy use.

Sources

1 Speech Recognition | September 2021

2 A short history of speech recognition | 2023

3 BofA’s Erica Surpasses 1.5 Billion Client Interactions, Totaling More Than 10 Million Hours of Conversations | July 13, 2023

4 Virtual Assistant Technology – statistics & facts | June 7, 2023

6 Voice Assistants in 2023: Usage, growth, and future of the AI voice assistant market | January 13, 2023

7 Customer Experience: Creating value through transforming customer journeys | Winter 2016

8 Otter.ai | 2023

9 The rise of AI speech enhancement & better team collaboration | December 17, 2020