In three words: energy-efficient computing.

To implement Edge AI successfully, manufacturers must balance computing performance, memory capacity, and power consumption. Devices need enough processing capability to run sophisticated AI models locally while staying power-efficient enough for extended operation on a limited battery.

This balance sounds straightforward in theory but presents significant challenges in practice. Manufacturers frequently encounter several critical obstacles:

· Energy Consumption Barriers: Many AI implementations drain power at unsustainable rates, rendering devices impractical for everyday use. Even the most innovative solutions fail to gain user acceptance when batteries require constant recharging.

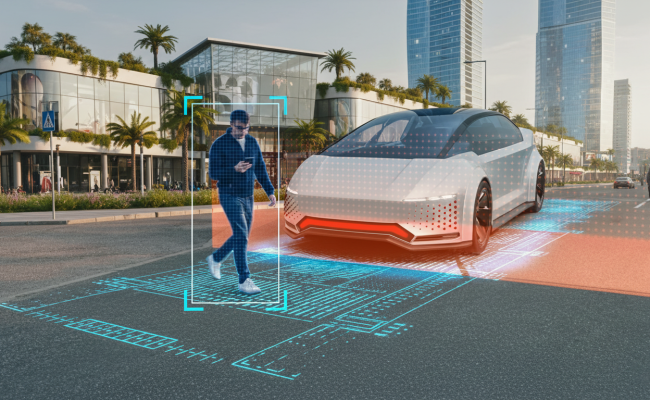

· Computational Limitations: Insufficient processing power creates bottlenecks that prevent real-time analysis, forcing devices to transmit data elsewhere for processing and defeating the core advantages of Edge AI architecture.

· Market Differentiation Challenges: When technical constraints force manufacturers to compromise on AI capabilities, the resulting products often lack distinctive features that would set them apart from competitors, leading to commoditization and reduced market value.

Effective Edge AI therefore requires careful optimization. Devices must carry enough computational resources to run complex machine learning algorithms on the device itself, whether analyzing environmental sensor data, processing voice commands, or detecting motion patterns, while remaining independent of cloud infrastructure and preserving battery life.
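To make the performance-versus-battery trade-off concrete, here is a minimal back-of-the-envelope sketch of how duty cycling (short bursts of inference separated by sleep) affects battery life. The function names and all figures are hypothetical and chosen for illustration only. The model is deliberately simple: it ignores regulator losses, battery self-discharge, and peak-current effects.

```python
def average_current_ma(active_ma, sleep_ma, inference_s, inferences_per_hour):
    """Time-weighted average current draw (mA) over one hour.

    active_ma: current while running inference (hypothetical figure)
    sleep_ma: current while idle between inferences (hypothetical figure)
    inference_s: duration of one inference, in seconds
    inferences_per_hour: how often the model runs
    """
    active_seconds = inference_s * inferences_per_hour
    if not 0 <= active_seconds <= 3600:
        raise ValueError("total active time must fit within one hour")
    duty_cycle = active_seconds / 3600.0
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah, avg_current_ma):
    """Idealized battery life: capacity divided by average current."""
    if avg_current_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / avg_current_ma


if __name__ == "__main__":
    # Illustrative values only: a 200 mAh cell, 50 mA during inference,
    # 0.05 mA asleep, one 100 ms inference every 6 seconds.
    avg = average_current_ma(active_ma=50.0, sleep_ma=0.05,
                             inference_s=0.1, inferences_per_hour=600)
    print(f"average current: {avg:.3f} mA")
    print(f"estimated life:  {battery_life_hours(200.0, avg):.0f} h")
```

Under this simplified model, average current grows linearly with time spent in inference, so halving either inference time or inference frequency roughly doubles battery life whenever active current dominates sleep current.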