heliaRT

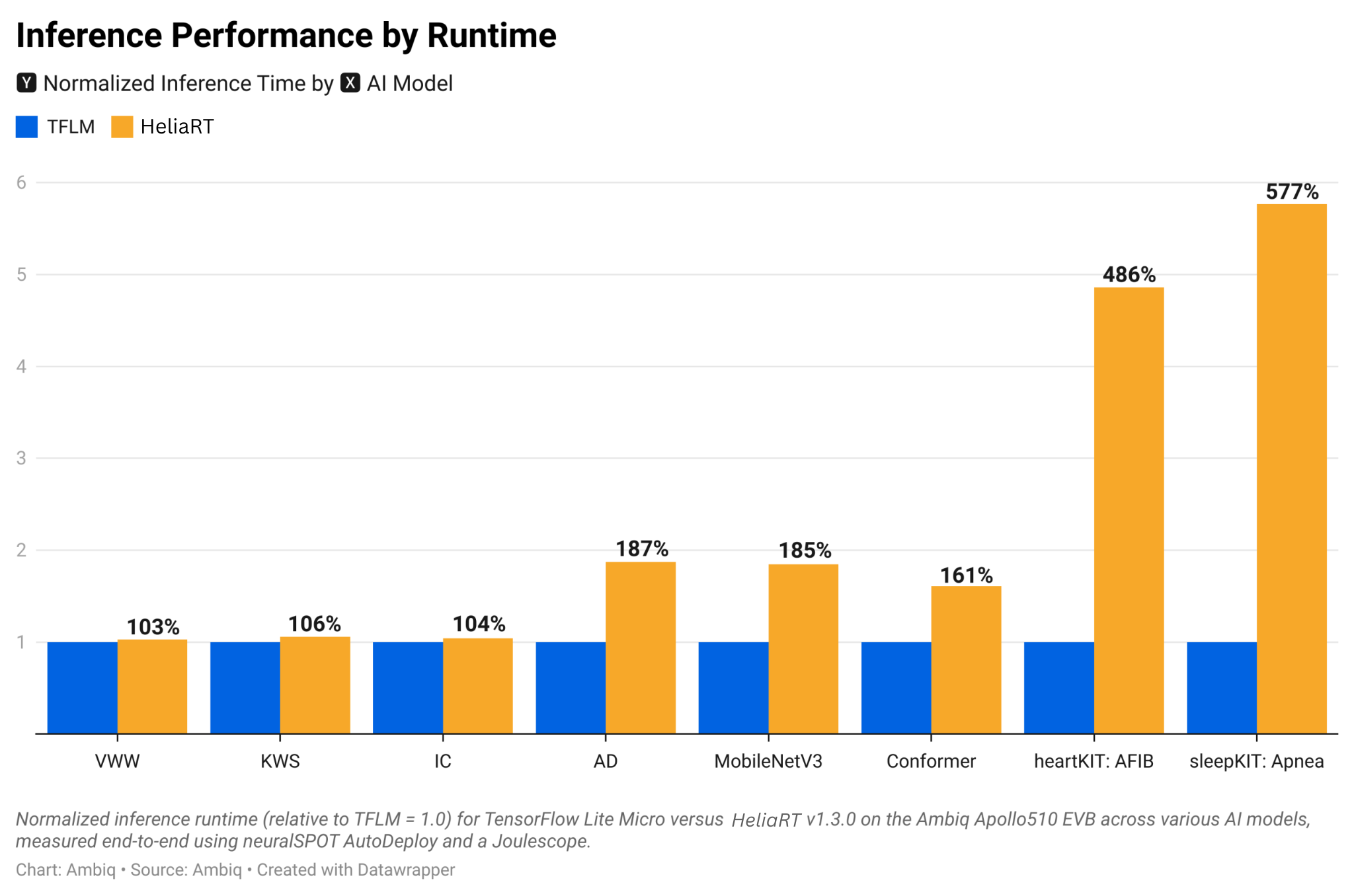

Ultra-Efficient Runtime

heliaRT is an ultra-efficient AI runtime built on TensorFlow Lite for Microcontrollers (TFLM) and optimized for Ambiq’s Apollo family of ultra-low-power systems-on-chip (SoCs). It simplifies development and accelerates deployment of high-performance, energy-efficient AI applications at the edge.