In today’s fast-paced, connected world, speed matters, especially when it comes to intelligence. Whether you’re talking to a virtual assistant, relying on a smartwatch for health alerts, or monitoring an industrial sensor for preventive maintenance, the time it takes for data to be processed, known as latency, can determine whether performance is smooth or frustratingly delayed.

What Is Latency?

Latency is the time delay between an input and the corresponding response in a system.

Every digital interaction has latency. The key question is: how much?
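To make the definition concrete, latency can be measured by timing the gap between handing a system an input and getting its response back. The Python sketch below does this with a monotonic, high-resolution clock. `measure_latency_ms` and `fake_handler` are hypothetical names used only for illustration, and the 50 ms sleep merely stands in for real processing.

```python
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def measure_latency_ms(handler: Callable[[T], R], event: T) -> Tuple[R, float]:
    """Return the handler's result and the input-to-response delay in milliseconds."""
    start = time.perf_counter()  # monotonic, high-resolution clock
    result = handler(event)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


# Hypothetical handler standing in for "interpret a command and respond".
def fake_handler(command: str) -> str:
    time.sleep(0.05)  # simulate roughly 50 ms of processing
    return f"ack: {command}"


if __name__ == "__main__":
    reply, latency = measure_latency_ms(fake_handler, "turn on the lights")
    print(reply, f"{latency:.1f} ms")
```

In a real device, the "input" and "response" points would be the moment a sensor sample or audio frame arrives and the moment the device acts on it, not a single function call.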

Low latency can make a device feel seamless, intuitive, and almost predictive. High latency, on the other hand, can turn even the smartest system into a sluggish bottleneck, leading to miscommunications, missed alerts, or operational inefficiencies. For example:

- When you say, “Turn on the lights,” your smart speaker must capture your voice, send the audio to the cloud, wait for the server to interpret it, and then send the command back. Even a delay of 300 milliseconds (0.3 seconds) can make it feel less responsive.

- In contrast, if that processing happens on the device (using Edge AI), the command can execute almost immediately, with no network round trip and no dependence on an internet connection.
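Because the cloud path is a chain of sequential steps, its end-to-end latency is roughly the sum of the individual stage delays, and moving processing on-device removes the network stages entirely. The sketch below models that as a simple latency budget. The stage delays are hypothetical, chosen only so the cloud path adds up to the 300 ms figure mentioned above; real values depend on network conditions, server load, model size, and hardware.

```python
# Hypothetical per-stage delays in milliseconds, for illustration only.
CLOUD_PIPELINE_MS = {
    "capture audio": 20,
    "upload audio to cloud": 80,
    "server-side interpretation": 120,
    "send command back": 80,
}

EDGE_PIPELINE_MS = {
    "capture audio": 20,
    "on-device inference": 40,
}


def end_to_end_ms(stages: dict) -> float:
    """Total latency of a sequential pipeline is the sum of its stage delays."""
    return sum(stages.values())


if __name__ == "__main__":
    cloud = end_to_end_ms(CLOUD_PIPELINE_MS)
    edge = end_to_end_ms(EDGE_PIPELINE_MS)
    print(f"cloud: {cloud} ms, edge: {edge} ms")
```

This simple additive model ignores retries, queuing, and variable network jitter, all of which tend to make the cloud path slower and less predictable in practice.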

Another example is in health monitoring wearables:

- With high latency (cloud-based processing), your device might take seconds to detect abnormal heart rhythms or blood oxygen levels.

- With low-latency Edge AI, alerts are processed locally and instantly, enabling timely health insights that could even save lives.

Why Latency Matters to Edge AI

For AI applications, real-time decision-making is crucial. Latency affects not just user experience but also safety, efficiency, and reliability. The value isn’t just in making decisions—it’s in making them at the exact moment they’re needed. Edge devices often operate in dynamic environments where conditions can change rapidly, and any processing delay can affect how effectively the system responds.

That means latency has a direct influence on performance, shaping everything from user satisfaction to operational outcomes. In practical terms, reducing latency lets AI systems act on data while it is still current, adapt to real-time inputs, and deliver consistent results even when cloud connectivity is limited or unavailable.

Here’s how latency plays out in a few different scenarios:

| Scenario | With High Latency (Cloud AI) | With Low Latency (Edge AI) |
| --- | --- | --- |
| Voice Assistants | Noticeable delay after voice command | Instant response, natural conversation |
| Smart Cameras | Slower object detection | Real-time tracking and recognition |
| Wearables | Delayed fitness or health feedback | Instant analysis and alerts |
| Industrial IoT Sensors | Slower system response | Immediate anomaly detection for safety |
| Autonomous Systems | Risk of delayed reaction | Real-time situational awareness |

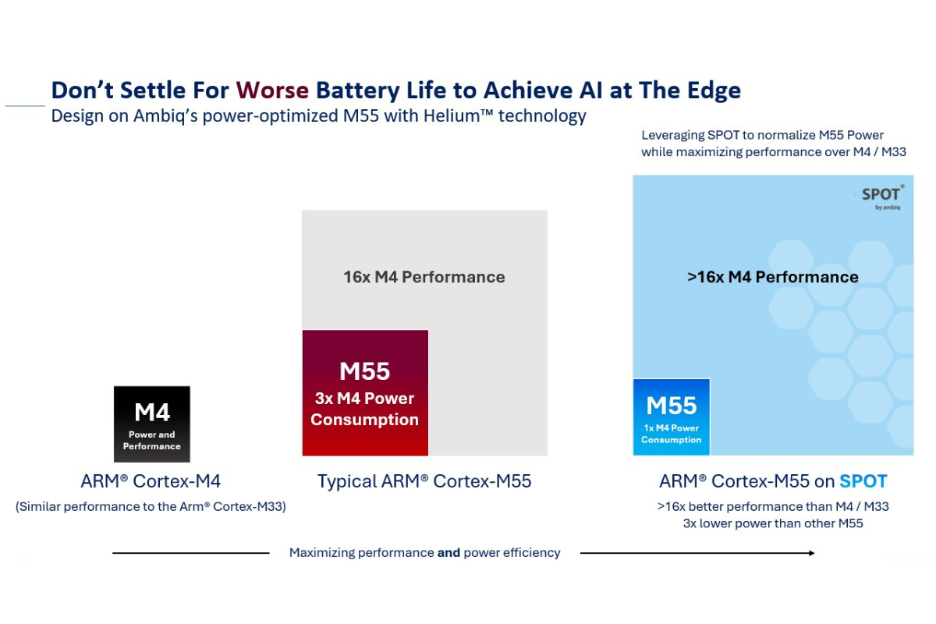

The Power Challenge: Performance vs. Energy

Running AI on the device delivers real-time responsiveness, but it also exposes a critical constraint: computation consumes energy, and edge devices already operate with extremely limited power budgets. Wearables, sensors, and always-on endpoint devices rely on tiny batteries or energy-harvesting systems, where even small increases in power draw can shorten battery life or disrupt continuous operation.

Cloud AI adds another layer to the problem. Sending raw data to the cloud for processing requires continuous wireless communication, which often consumes far more power than local computation. So even if the cloud handles the “heavy lifting,” the energy cost of repeatedly transmitting data can drain a battery much faster than performing inference on-device. For many real-time or always-on applications, round-trip communication is both energy-inefficient and unsustainable.
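The tradeoff can be reasoned about with the basic relation energy = power × time. The Python sketch below compares the energy per event of streaming raw data to the cloud against running inference locally and sending only a short result, then converts each into a rough battery-life estimate. Every number here, and the names `Activity`, `RADIO_SEND_RAW`, `LOCAL_INFERENCE`, and `RADIO_SEND_RESULT`, is hypothetical and chosen only for illustration; none of it is a measurement of any real radio, SoC, or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    """One burst of activity: an average power draw held for a fixed duration."""
    power_mw: float    # average power while active, in milliwatts
    duration_s: float  # active time per event, in seconds

    def energy_mj(self) -> float:
        # Energy (mJ) = power (mW) x time (s)
        return self.power_mw * self.duration_s


# Hypothetical figures for illustration only.
RADIO_SEND_RAW = Activity(power_mw=30.0, duration_s=0.5)      # stream a window of raw sensor data
LOCAL_INFERENCE = Activity(power_mw=5.0, duration_s=0.1)      # run a small model on the device
RADIO_SEND_RESULT = Activity(power_mw=30.0, duration_s=0.01)  # transmit only a short result or alert


def cloud_energy_per_event_mj() -> float:
    # Cloud path: the device pays for transmitting the raw data every time.
    return RADIO_SEND_RAW.energy_mj()


def edge_energy_per_event_mj() -> float:
    # Edge path: infer locally, then send only the small result.
    return LOCAL_INFERENCE.energy_mj() + RADIO_SEND_RESULT.energy_mj()


def battery_life_hours(capacity_mwh: float, energy_per_event_mj: float,
                       events_per_hour: float) -> float:
    # 1 mWh = 3600 mJ. Ignores sleep current and every other load on the battery.
    return capacity_mwh * 3600.0 / (energy_per_event_mj * events_per_hour)


if __name__ == "__main__":
    paths = [("cloud", cloud_energy_per_event_mj()), ("edge", edge_energy_per_event_mj())]
    for name, energy in paths:
        hours = battery_life_hours(capacity_mwh=100.0, energy_per_event_mj=energy,
                                   events_per_hour=60)
        print(f"{name}: {energy:.2f} mJ/event, ~{hours:.0f} h on a hypothetical 100 mWh battery")
```

The comparison only favors the edge path when local inference costs less energy than the transmission it replaces; substituting measured values from real datasheets is what decides the outcome for a given design.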

Traditional processors weren’t designed to navigate this tradeoff. While they can execute AI workloads, they do so inefficiently, generating excess heat, requiring larger batteries, or forcing developers to dial back model complexity to conserve power.

This is where an energy-efficient semiconductor design becomes essential. With an ultra-low-power semiconductor, devices can run meaningful AI models locally, reducing or even eliminating the need to constantly export data to the cloud. The result:

- Lower power consumption due to fewer radio transmissions

- Longer battery life, even with continuous sensing

- Smaller, sleeker devices that don’t need oversized batteries

- More reliable performance, independent of network availability

In other words, Edge AI isn’t just faster; for many always-on workloads it is also more energy-efficient, and the right semiconductor technology is what makes that efficiency possible at scale.

How Ambiq Enables Ultra-Low Latency at the Edge

Ambiq’s ultra-low-power semiconductor solutions are redefining what’s possible for AI at the edge. Built on its patented Subthreshold Power Optimized Technology (SPOT®) platform, Ambiq’s ultra-low-power Apollo systems-on-chip (SoCs) provide high-performance AI inference while consuming only a fraction of the power of traditional processors.

What this means in practice:

- Voice assistants that respond instantly — even offline

- Wearables that analyze biometrics in real time without draining the battery

- Smart sensors that detect and respond to changes the moment they happen

Ambiq’s platform and technologies allow device manufacturers to achieve real-time responsiveness with weeks or months of battery life, unlocking new possibilities for consumer, healthcare, smart home, automotive, and industrial markets alike.

Latency Meets Efficiency: The Future of AI

As the world shifts toward on-device intelligence, latency becomes the defining measure of performance. Edge AI powered by Ambiq’s ultra-low power semiconductor solutions ensures that intelligence happens faster, locally, and efficiently.

Whether it’s your watch detecting heart irregularities, your security camera identifying motion, or your voice assistant understanding you in real time, the future of AI is immediate, and it’s running at the edge.

For more, read Ambiq’s latest white paper on enabling lab-grade biosignal analytics directly at the edge, providing real-time metrics for the next generation of wearable preventive and medical applications.