In a recent interview with SafetyDetectives, Scott Hanson, the Founder and CTO of Ambiq, delved into the creation and evolution of the SPOT platform, the technology behind Ambiq’s effort to build the world’s most energy-efficient chips. The platform originated in Hanson’s time as a PhD student at the University of Michigan, where he developed tiny systems for medical implants, and it uses Sub-threshold Power Optimized Technology to achieve unprecedented energy efficiency.

Hanson discussed how the platform departs from conventional digital chip designs, highlighting the significant energy savings achieved by operating at low voltages. The interview also covered Ambiq’s role in addressing technical challenges in the IoT industry, with particular emphasis on power efficiency and security. Hanson expressed optimism about the future of ultra-low power technology, foreseeing continuous improvements and a surge in compute power for IoT devices over the next 5-10 years.

Can you describe the journey that led to the creation of the SPOT platform?

Hi, my name is Scott Hanson, and I’m the founder and CTO of Ambiq.

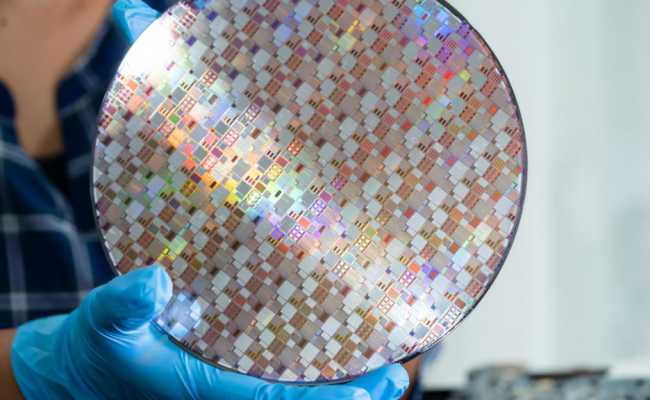

Ambiq is a company that builds the world’s most energy-efficient chips. We’re putting intelligence everywhere; that’s really our tagline. We want to make chips with such low power that we can embed little microprocessors in everything: your clothing, the paint on the walls, the bridges we drive over, pet collars, and so on.

The company is built around a technology that we call SPOT, or Sub-threshold Power Optimized Technology. It’s a low-power circuit design technology platform that came out of my time at the University of Michigan. I was a PhD student there, and we were building tiny systems for medical implants.

When I say tiny, I mean really small. We’re talking about one cubic millimeter containing a microprocessor, a radio, an antenna, sensors, and a power source. When you’re building a system like that, the first thing you figure out is that the battery’s tiny, so the power budget that corresponds to that battery must also be tiny.

We got to thinking in terms of picowatts and nanowatts, right? We usually think about watts and kilowatts in the normal world, but we had to think about picowatts and nanowatts. Once we did that, we could build these cubic-millimeter systems, which could be implanted in the eyes of glaucoma patients to measure pressure fluctuations. It was exciting that the SPOT platform made all of that possible.

During that project, I started to see a lot of interest from companies in the technology, and it got me thinking that this technology had commercial potential. I remember riding in an elevator at the University of Michigan in the Computer Science building and realizing that the technology would be commercialized one day, and I needed to be the person to do that.

Shortly afterward, I started Ambiq with my two thesis advisors at Michigan, and we managed to raise some money. Then we launched our first few products, and here we are 13 years later, having shipped well over 200 million units of our SPOT-enabled silicon. It’s been a really fun journey.

How does the SPOT platform fundamentally differ from traditional microcontroller technology?

I’m going to avoid giving you the full Circuits 101 lecture here. Conventional digital chips, and not just microcontrollers but essentially any digital chip, signal ones and zeros using voltage.

A digital zero might be 0 volts, and a digital one a much higher voltage, such as 1 or 1.2 volts. That higher voltage is chosen because it makes ones and zeros easy to distinguish. It’s also chosen because 1 or 1.2 volts is well above the turn-on voltage of the transistor.

Every modern chip is basically made of transistors, the fundamental building block.

We’ve got billions of transistors on these chips, and they act like little switches. Think of them like a light switch. When the voltage applied to the transistor is above a turn-on voltage, what we call the threshold voltage, it turns on. Drop below the threshold voltage, and it turns off. You can see how you can string these together to build devices that signal zeros and ones.

If you look at your Circuits 101 textbook, it teaches you to apply a voltage well above the threshold (turn-on) voltage so the transistor functions as a proper digital switch. Pretty much every chip out there operates that way. At Ambiq, however, SPOT ignores that convention. We represent a one with a much lower voltage; think of 0.5, 0.4, or 0.3 volts. If that voltage is below the transistor’s turn-on voltage, we call it sub-threshold. If it’s at or near the turn-on voltage, it’s called near-threshold.

It turns out that by shrinking what a digital one is, you get huge energy savings. Switching energy is proportional to voltage squared, so it’s quadratic in voltage, and therefore you get a huge energy reduction by operating at low voltage.
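To put rough numbers on the quadratic relationship, here is a minimal sketch using the standard first-order model for dynamic switching energy, E = C·V², where C is the capacitance being charged and discharged. This is a textbook approximation chosen for illustration, not Ambiq’s own model: it ignores leakage, and the 1 fF node capacitance is a hypothetical value that cancels out of the ratios anyway.

```python
NOMINAL_VOLTS = 1.2  # a conventional "digital one" mentioned in the interview


def switching_energy(capacitance_farads: float, supply_volts: float) -> float:
    """Energy drawn from the supply per charge/discharge cycle of a node: C * V**2."""
    return capacitance_farads * supply_volts ** 2


def relative_energy(supply_volts: float, nominal_volts: float = NOMINAL_VOLTS) -> float:
    """Switching energy at supply_volts as a fraction of the energy at nominal_volts."""
    node_capacitance = 1e-15  # hypothetical 1 fF node; it cancels out of the ratio
    return switching_energy(node_capacitance, supply_volts) / switching_energy(
        node_capacitance, nominal_volts
    )


if __name__ == "__main__":
    # Compare the conventional 1.2 V level with the sub-/near-threshold levels cited.
    for volts in (1.2, 0.5, 0.4, 0.3):
        print(f"{volts:.1f} V: {relative_energy(volts):.3f} of the 1.2 V switching energy")
```

Under this model, lowering a digital one from 1.2 V to 0.4 V cuts the energy per switching event to one-ninth, since (0.4/1.2)² = 1/9. In practice, leakage energy per operation rises as circuits slow down at low voltage, so real savings are smaller than this idealized ratio.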

That comes with all kinds of idiosyncrasies, and that’s what has kept other companies away. Transistors start behaving strangely; they still operate as switches, but they become tough to manage. Our SPOT platform is about dealing with those idiosyncrasies, the sub-threshold challenges, and it works.
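One common textbook way to see why transistors become touchy below threshold is the simplified sub-threshold current model, in which drain current depends exponentially on the gate voltage relative to the threshold voltage. The sketch below uses that model with an assumed slope factor n = 1.5 at 300 K to show how much the current shifts when the threshold voltage moves by a few tens of millivolts, for example from manufacturing variation. It illustrates the general effect only and is not a description of how SPOT handles it.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23
ELECTRON_CHARGE_C = 1.602176634e-19


def thermal_voltage(temperature_kelvin: float = 300.0) -> float:
    """V_T = kT/q, roughly 25.9 mV at 300 K."""
    return BOLTZMANN_J_PER_K * temperature_kelvin / ELECTRON_CHARGE_C


def subthreshold_current_ratio(
    vth_drop_volts: float, n: float = 1.5, temperature_kelvin: float = 300.0
) -> float:
    """Factor by which sub-threshold drain current grows when V_th drops.

    Simplified model (assumption): I_D ~ I_0 * exp((V_GS - V_th) / (n * V_T)),
    ignoring drain-voltage and body effects. Lowering V_th by vth_drop_volts
    therefore multiplies the current by exp(vth_drop_volts / (n * V_T)).
    """
    return math.exp(vth_drop_volts / (n * thermal_voltage(temperature_kelvin)))


if __name__ == "__main__":
    # Illustrative threshold shifts, in millivolts.
    for drop_mv in (10, 30, 50):
        ratio = subthreshold_current_ratio(drop_mv / 1000)
        print(f"V_th lower by {drop_mv} mV -> current x{ratio:.2f}")
```

With these assumptions, a 30 mV drop in threshold voltage roughly doubles the current (about 2.2x). Above threshold, with a supply near 1 V, the same shift changes the current by only a few percent, which is one reason sub-threshold designs need extra care.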

Sub-threshold and near-threshold design has been around for decades, but Ambiq was the first to really commercialize it widely, and our timing was perfect. About ten years ago, as the company was getting off the ground, the Internet of Things (IoT) was just starting to explode. Battery-powered devices were going everywhere, and there was an insatiable hunger for low power. The SPOT platform came along at the right time, and we’ve solved a lot of power problems, but the need for more compute keeps growing.

AI is popping up everywhere. It’s not just in the cloud; it’s also beginning to appear in edge devices like the ones we serve, such as consumer wearables and smart home devices. AI is straining the power budgets of all these devices, which means we have to keep innovating and releasing new, lower-power products.

What are the environmental implications of the widespread adoption of ultra-low power technologies?

Lower power is good for the environment; it’s a green technology. If low-power technology lets me shrink a device’s power needs, that’s good. However, the truth is a bit more complex than that.

What tends to happen is that our customers don’t necessarily use the energy savings to ship smaller batteries or charge less often. Instead, they tend to add new functions. They’ll say, “You have a more power-efficient processor? Then I’ll add more features within the same power budget.” So the world’s power footprint isn’t really decreasing; we’re just able to get more done within the same footprint.

It’s probably a wash in terms of environmental impact. That said, the IoT as a whole has some pretty fantastic potential for the environment. We’re putting sensors all over our homes and buildings, and we could put climate sensors all over the world. That gives us a better sense of what’s going on, whether it’s tracking climate change, measuring how much energy a building is using, or noticing that we’re leaving lights on in a hallway where nobody is present.

So there’s potential to use the Internet of Things to dramatically reduce our energy usage, by better managing the energy consumption of buildings and homes, and also to get a better sense of what’s happening in the world.

So I think there is the potential for our technology to be used in a very positive way.

But most of our customers tend to be using it just to kind of get more out of their existing power footprint.

What are the biggest technical challenges facing the IoT industry today?

Power is one of them, and it’s one we address diligently every day. We want to put billions of devices everywhere, and you don’t want to be changing billions of batteries. So having a low-power platform like SPOT is really critical.

Security is a growing concern. IoT has been developed very quickly and often without proper attention to security. The average person now has tens of IoT devices at home, and they’re collecting all kinds of intimate data about us, including health information and movement patterns within the home. Virtual assistants are constantly capturing our speech to listen for the “Alexa” or “OK Google” keyword, and that’s not stopping, right?

There’s this insatiable appetite for more AI; deep neural networks are exploding everywhere. We’re going to see this constant need for processing in the cloud, which means we’re sending data up to the cloud.

That’s a privacy problem, right?

There are many ways to handle that, and a lot of good, interesting security hardware and software is popping up. However, I’d say the most effective solution is probably pretty simple: don’t send as much data to the cloud.

Do most of the processing at the edge, such as on the smartwatch, smart thermostat, and the Echo device in your house.

There’s no need to send all the data up to the cloud; it can be processed locally. It turns out that’s a power problem, too. If, instead of sending 100% of the raw sound data from an Amazon Echo up to the cloud, we send only the 1% that’s interesting enough to send, there has to be local processing. It must happen on the smartwatch, thermostat, Echo, or whatever device has the sensors capturing the data.
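The filtering idea can be sketched as a simple gate: a local detector looks at each audio frame, and only flagged frames leave the device. In this hypothetical Python sketch, `energy_detector` stands in for a real on-device model such as a keyword spotter; a frame-energy threshold is used instead so the example runs on its own, and the frame contents, frame size, and threshold are made-up values.

```python
from typing import Callable, Iterable, List, Sequence

Frame = Sequence[float]


def frame_energy(frame: Frame) -> float:
    """Mean squared amplitude of one audio frame."""
    return sum(x * x for x in frame) / len(frame)


def gate_frames(
    frames: Iterable[Frame], is_interesting: Callable[[Frame], bool]
) -> List[Frame]:
    """Keep only the frames the local detector flags; everything else stays on the device."""
    return [frame for frame in frames if is_interesting(frame)]


def energy_detector(frame: Frame, threshold: float = 0.01) -> bool:
    """Hypothetical stand-in for an on-device model: flag loud frames."""
    return frame_energy(frame) > threshold


if __name__ == "__main__":
    quiet = [0.01] * 160        # hypothetical background-noise frame
    speech = [0.5, -0.5] * 80   # hypothetical frame containing speech
    frames = [quiet] * 99 + [speech]
    sent = gate_frames(frames, energy_detector)
    print(f"sent {len(sent)} of {len(frames)} frames to the cloud")
```

Here 1 of 100 frames passes the gate, matching the 1% figure in the answer. In a real product the detector itself is the expensive part, which is why running it within a tight power budget matters.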

That’s a power problem, especially as the neural networks supporting these use cases get bigger. Fortunately, SPOT is a great solution. We’re doing a lot of architectural innovation so our customers can run big, beefy neural networks locally on devices like these. I’m confident we can attack that problem. However, I foresee a rocky few years for security.

How do you see the role of AI and machine learning in the evolution of IoT devices?

What I think is going to happen is that we’ll see a migration of AI from purely a data center thing to the edge devices, which means wearables, smart home devices, your car, or devices with sensors.

We’re seeing our customers run lightweight activity tracking or biosignal analysis on wearables. They’re embedding virtual assistants in everything, whether it’s a wearable, hearable, or smart home appliance. In all these cases, there’s a balance between the edge and the cloud. The neural network can have a lightweight front end that runs locally; if it identifies something interesting, it then passes it to the cloud for further analysis.

What I’m really excited about is the potential of large language models (LLMs) such as ChatGPT, which are trained on enormous amounts of data, largely from the Internet. However, they don’t have eyes and ears; they’re not out in the real world understanding what’s happening. That’s the role of edge devices: your wearables, smart home devices, or smart switches. Those devices are constantly capturing information about us.

If they could run lightweight neural networks locally to identify activities or events of interest, they could ship those to a ChatGPT-like model in the cloud. Imagine your wearable monitoring your vitals, heart rate, breathing, and talking, identifying trends of interest, and sending those up to the AI.

I’m not talking about sending the megabytes or even gigabytes of data it’s constantly collecting. I’m talking about sending a few little snippets, a few little observations. For example, you had a high-activity day today, or your sleep was not very good last night. You send that up to the cloud, and then you can ask it more useful questions, like “Hey, I haven’t been feeling great lately. What’s wrong?” The AI could answer with something along the lines of “I’ve been watching you for the last six months, and I see that your sleep has been irregular. You need to get more regular sleep, and here’s what you can do to fix that,” right?
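The contrast between raw sensor streams and “a few little snippets” can be sketched as an on-device summarization step. Everything here is hypothetical: the `DaySummary` fields, the thresholds, and the wording of the notes are invented for illustration, and a real wearable would derive its summaries from much richer signals.

```python
from dataclasses import dataclass
from statistics import pstdev
from typing import List


@dataclass
class DaySummary:
    steps: int          # hypothetical: daily step count computed on the device
    sleep_hours: float  # hypothetical: sleep duration estimated on the device


def observations(
    days: List[DaySummary],
    high_steps: int = 12_000,   # hypothetical threshold for a high-activity day
    short_sleep: float = 6.0,   # hypothetical threshold for a short night, in hours
    irregular_sd: float = 1.0,  # hypothetical sleep spread (hours) that counts as irregular
) -> List[str]:
    """Condense recent per-day summaries into a few short text observations."""
    latest = days[-1]
    notes: List[str] = []
    if latest.steps >= high_steps:
        notes.append("High-activity day today.")
    if latest.sleep_hours < short_sleep:
        notes.append("Sleep was short last night.")
    if len(days) > 1 and pstdev([d.sleep_hours for d in days]) > irregular_sd:
        notes.append("Sleep duration has been irregular recently.")
    return notes


if __name__ == "__main__":
    recent_days = [
        DaySummary(steps=8_000, sleep_hours=7.5),
        DaySummary(steps=6_000, sleep_hours=5.0),
        DaySummary(steps=9_000, sleep_hours=8.5),
        DaySummary(steps=14_000, sleep_hours=5.5),
    ]
    for note in observations(recent_days):
        print(note)
```

Only the short strings would be sent to the cloud; the raw samples behind `steps` and `sleep_hours` stay on the device.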

There are countless examples of things like this where edge devices can collaborate with large language models in the cloud to achieve fantastic results.

Now, there are obvious security problems there. We just talked about how security is one of the major challenges facing IoT, and that’s no different here; it’s a problem that needs to be managed. However, if we do most of the processing locally, we can manage the security issues effectively. Between the edge and the cloud, I think there’s a way to address the security problems that come up. And I think there’s real power in what can be achieved for end users.

How do you see ultra-low power technology evolving in the next 5-10 years?

The good news is that I see it improving with really no end in sight. We’re going to see far more compute power packed into shrinking power budgets.

Moore’s law is alive and well for the embedded world. We’re at a process node today that’s 22 nanometers. The likes of Qualcomm, Intel, and others are down below 5 nanometers, so we’ve got a long way to go to catch up to them.

Moore’s law is going to deliver all kinds of gains. That means faster processors and lower power processors. We’re also doing a ton of innovation on the architecture, circuits, and software. I don’t see an end to power improvement, certainly not in the next decade.

Just look at how far Ambiq has come in the last ten years. We have a family of SoCs called Apollo. The first one launched in 2014 and ran at 24 MHz. It had a small processor and less than 1 MB of memory. Our latest Apollo4 processors have many megabytes of memory and run at nearly 200 MHz. They have GPUs and USB interfaces, yet consume 1/8 of the power of our initial product. So we’re getting dramatically faster and dramatically lower power, and that will continue.

If you just extrapolate those numbers going forward, we’re going to have an amazing amount of compute power for all your IoT devices, and that’s exciting.

I don’t know exactly what we’ll do with all that compute, but I do know that customers ask me every day for more processing power and lower power, and they’re going to be doing some pretty exciting things.

So, I’m excited about where we’re going from here.

This interview with Shauli Zacks originally appeared on SafetyDetectives on January 4, 2024.